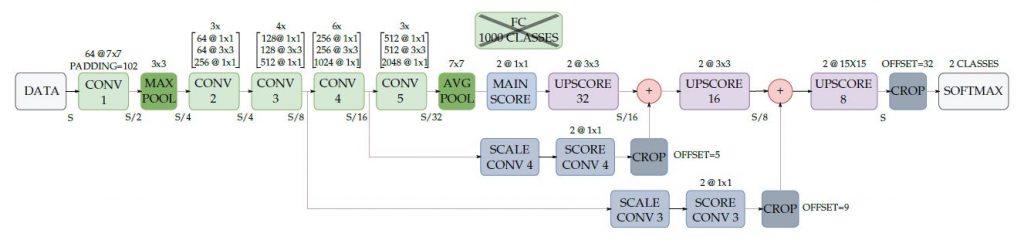

The INVETT Research Group of the University of Alcalá has developed a Deep Learning system for accurate road detection based on the ResNet-101 network, with a fully convolutional architecture and multiple upscaling steps for image interpolation. This work demonstrates that significant generalization gains are attained by randomly generating augmented training data through several geometric transformations and pixel-wise changes, such as affine and perspective transformations, mirroring, image cropping, distortions, blur, noise, and colour changes. In addition, it shows that a 4-step upscaling strategy yields better learning results than similar techniques that perform upscaling from shallow layers, which carry only a scarce representation of the scene. The complete system is trained and tested on data from the KITTI benchmark, and it is also tested on images recorded on the Campus of the University of Alcalá (Spain). The improvement attained through data augmentation and a number of training variants is encouraging, and points the way towards better generalization of road detection systems for real deployment in self-driving cars. Figure 1 shows the general architecture of the proposed DEEP-DIG system.

Figure 1. High-level schematic of the DEEP-DIG CNN-based road detector, implementing the FCN-8s architecture on a ResNet.
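
In an FCN-style decoder on a ResNet, the coarsest feature map (output stride 32) is upsampled in several steps, fusing skip connections from intermediate layers at each step. The exact layers used by the four-step scheme are not detailed here; the sketch below assumes the extra step fuses a stride-4 feature map, and the function name `upsampling_schedule` is hypothetical. It only computes the per-step upsampling factors, which makes the difference between the three-step FCN-8s decoder and a four-step variant concrete.

```python
def upsampling_schedule(skip_strides, deepest_stride=32):
    """Return the per-step upsampling factors of an FCN-style decoder.

    Starting from the deepest feature map (output stride `deepest_stride`),
    each step upsamples to the stride of the next skip layer and fuses it;
    a final step restores the input resolution (stride 1).
    """
    factors = []
    current = deepest_stride
    for stride in list(skip_strides) + [1]:
        if current % stride != 0:
            raise ValueError(f"stride {stride} does not divide {current}")
        factors.append(current // stride)
        current = stride
    return factors


# FCN-8s: fuse the stride-16 and stride-8 feature maps -> three steps.
print(upsampling_schedule([16, 8]))     # [2, 2, 8]
# Hypothetical four-step variant that also fuses a stride-4 feature map.
print(upsampling_schedule([16, 8, 4]))  # [2, 2, 2, 4]
```

The product of the factors is always 32, so both decoders recover full resolution; the four-step variant simply replaces the final ×8 jump with a ×2 step plus a ×4 step, adding one more fusion point.
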

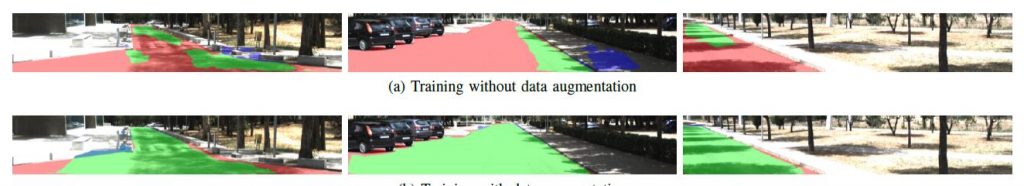

The proposed network has been trained on the KITTI road benchmark, which is composed of 289 images manually labelled with two classes (road/non-road). Half of the images are used for training the network, and the remaining half are kept aside for validation. The ResNet model previously trained on ImageNet is used for weight initialization, and the full network is then fine-tuned on the road detection task. Training runs for 24K iterations, with validation checkpoints every 4K iterations. The Caffe framework has been used to define the network prototype and to control the training and testing processes. It takes between two and three hours to complete the standard training on a single Titan X GPU.

Instead of passing the original images to the network, data augmentation operations are applied to extend the training set, prevent overfitting and make the network more robust to image changes. The data augmentation layer runs on the CPU, while the rest of the processing can run on the GPU. An on-line augmentation approach is adopted: since the modifications are drawn at random each time, the network never sees the same augmented image twice, and this virtually infinite dataset requires no extra storage space on disk. Data augmentation also makes the network more robust against the usual changes that appear in road images, such as illumination, colour or texture changes, or variations in camera orientation. One of the main weaknesses of CNNs is their dependence on the training data; with data augmentation, better generalization can be achieved and different road conditions can be simulated.
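
The training protocol above (a 50/50 split of the 289 labelled images, 24K iterations with validation every 4K, and on-line augmentation) can be sketched as follows. This is a minimal illustration in plain Python, not the Caffe setup used in the work: the function names, the one-image-per-iteration assumption and the augmentation parameter ranges are all hypothetical. The point it shows is that augmentation parameters are resampled at every iteration and never stored.

```python
import random


def split_half(image_ids, seed=0):
    """Shuffle the labelled images and split them 50/50 into train/validation."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


def sample_augmentation(rng):
    """Draw fresh augmentation parameters (ranges are hypothetical)."""
    return {
        "mirror": rng.random() < 0.5,
        "rotation_deg": rng.uniform(-5.0, 5.0),
        "brightness": rng.uniform(0.8, 1.2),
    }


def online_training_schedule(train_ids, total_iters=24_000, val_every=4_000, seed=0):
    """Yield one training step per iteration (one image per step, assumed) with
    newly sampled augmentation parameters, flagging validation iterations."""
    rng = random.Random(seed)
    for it in range(1, total_iters + 1):
        image_id = rng.choice(train_ids)
        params = sample_augmentation(rng)  # drawn on the fly, never written to disk
        yield it, image_id, params, it % val_every == 0


train_ids, val_ids = split_half(range(289))
print(len(train_ids), len(val_ids))  # 144 145
checkpoints = [it for it, _, _, validate in online_training_schedule(train_ids) if validate]
print(checkpoints)  # [4000, 8000, 12000, 16000, 20000, 24000]
```

Because the parameters come from a generator rather than a precomputed list, the effective dataset grows with the number of iterations at no storage cost, which is the property the on-line approach relies on.
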
The data augmentation techniques implemented in this work are: a) geometric transformations: image cropping, random affine transformation, perspective transformation, mirroring and distortion; b) pixel value transformations: noise, blur and random colour changes in HSV and RGB. The effect of data augmentation in practice is substantial, as depicted in Figure 2, where the upper row (a) shows the road segmentation result after training without data augmentation, while the bottom row (b) shows the result for the same scenes after training with data augmentation. As can be seen, data augmentation clearly improves the interpretation of the road scene in terms of generalization capability.
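
A key detail when augmenting segmentation data is that geometric transformations must be applied identically to the image and to its road mask, whereas pixel value transformations affect the image only. The sketch below illustrates this with mirroring, cropping and Gaussian noise on grey-level images stored as nested lists; it is a simplified, hypothetical stand-in for the actual augmentation layer, and the crop fraction and noise level are assumptions.

```python
import random


def mirror(rows):
    """Flip an image (list of rows) horizontally."""
    return [list(reversed(row)) for row in rows]


def crop(rows, top, left, height, width):
    """Cut a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in rows[top:top + height]]


def add_noise(rows, rng, sigma=5.0):
    """Add Gaussian noise to grey-level pixels, clipped to [0, 255]."""
    return [[min(255.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in rows]


def augment(image, label, rng):
    """Geometric transforms are applied to the image AND the road mask so that
    they stay aligned; pixel value transforms (here, noise) touch the image only."""
    if rng.random() < 0.5:
        image, label = mirror(image), mirror(label)
    h, w = len(image), len(image[0])
    top, left = rng.randint(0, h // 4), rng.randint(0, w // 4)
    ch, cw = h - h // 4, w - w // 4  # crop window always fits inside the image
    image, label = crop(image, top, left, ch, cw), crop(label, top, left, ch, cw)
    return add_noise(image, rng), label


rng = random.Random(0)
image = [[float(10 * c) for c in range(8)] for _ in range(8)]
label = [[1 if c >= 4 else 0 for c in range(8)] for _ in range(8)]
aug_image, aug_label = augment(image, label, rng)
print(len(aug_image), len(aug_image[0]))  # 6 6
```

For affine and perspective warps the same rule holds, with one addition: the mask should be resampled with nearest-neighbour interpolation so that it stays strictly binary.
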

Figure 2. Qualitative results of the proposed model, coloured as follows: TP in green, FP in blue and FN in red. Scenarios with strong illumination changes and challenging road textures are better detected by the model trained with data augmentation.
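
The colour code in Figure 2 follows from comparing each predicted pixel with the ground truth. A minimal sketch, assuming binary masks stored as nested lists with 1 meaning road; the names `classify_pixel` and `colour_overlay` are hypothetical, and true negatives are left uncoloured so the original image can show through.

```python
# Colours used in Figure 2 (RGB): TP in green, FP in blue, FN in red.
COLOURS = {"TP": (0, 255, 0), "FP": (0, 0, 255), "FN": (255, 0, 0)}


def classify_pixel(pred, gt):
    """Return 'TP', 'FP', 'FN' or None (true negative) for one pixel,
    where 1 means road and 0 means non-road."""
    if pred and gt:
        return "TP"
    if pred and not gt:
        return "FP"
    if gt and not pred:
        return "FN"
    return None


def colour_overlay(pred_mask, gt_mask):
    """Build a per-pixel colour map; true negatives map to None."""
    return [
        [COLOURS.get(classify_pixel(p, g)) for p, g in zip(prow, grow)]
        for prow, grow in zip(pred_mask, gt_mask)
    ]


print(colour_overlay([[1, 1, 0, 0]], [[1, 0, 1, 0]]))
# [[(0, 255, 0), (0, 0, 255), (255, 0, 0), None]]
```
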

DEEP-DIG is the result of an in-depth analysis of a deep learning-based road detection system. The analysis starts from a ResNet-50 model with a fully convolutional architecture and three interpolation steps, fine-tuned on KITTI images in perspective view. Several variations are then introduced to improve training: data augmentation, training in bird's-eye view (BEV) space, tuning of the training parameters, deeper models and other up-sampling architectures. Data augmentation yields a significant improvement of between 1% and 2% in F-measure, so it is included in all the remaining variations. These variations can provide a consistent additional improvement of over 0.5% when properly combined. Finally, a ResNet-101 model with a four-step up-sampling scheme, trained directly in BEV with data augmentation, improves our results to 96.31% on the validation subset and achieves state-of-the-art results on the testing set. Nevertheless, the appropriate configuration depends on the final application and on the trade-off between performance and computing capacity. As future work, a post-processing layer will be added to the system to obtain smoother results, and high-level information provided by digital navigation maps will be included to solve some of the problems described before. The operation of the DEEP-DIG system is illustrated in the video available at: https://youtu.be/-7faqzBH82c
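
The F-measure used to compare the variants above is the harmonic mean of pixel-level precision and recall. The KITTI road benchmark ranks methods by the maximum F-measure over confidence thresholds (MaxF); the sketch below approximates that by sweeping a hypothetical list of thresholds over per-pixel road probabilities. It is an illustration only, not the benchmark's official evaluation code.

```python
def f_measure(tp, fp, fn):
    """F1 score from pixel counts: harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def max_f_measure(scores, labels, thresholds):
    """Best F-measure over a set of thresholds applied to per-pixel road
    probabilities (`scores`) against binary ground truth (`labels`)."""
    best = 0.0
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and not y)
        fn = sum(1 for s, y in zip(scores, labels) if s < t and y)
        best = max(best, f_measure(tp, fp, fn))
    return best


print(round(f_measure(90, 10, 10), 4))  # 0.9
print(round(max_f_measure([0.9, 0.8, 0.4, 0.2], [1, 1, 0, 1], [0.5, 0.3, 0.1]), 4))  # 0.8571
```

In the toy example the best threshold is the lowest one: accepting every pixel costs one false positive but recovers the missed road pixel, giving precision 0.75 and recall 1.0.
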